You’ve probably told ChatGPT (or other AI tools) to “write a readme file,” “generate a feature,” or “summarize a document,” and then… it did.

Kind of.

Sort of.

Not really.

The tone was off.

The format wasn’t what you expected.

And it felt like AI was giving you what it wanted — not what you wanted.

You’re not alone. Most people throw tasks at AI, expecting magic.

But here's what no one told you: AI isn't confused. You are.

GPT, Claude, Gemini: they’re all trained on enormous amounts of text. But they’re not mind readers.

When you say:

“Summarize this doc.”

AI is like:

“Sure. But like… how do you want it?”

That’s the whole game.

You’re either giving it instructions with zero context and hoping for the best…

Or, you’re teaching it how to think like you — one prompt at a time.

And that’s where Zero-shot and Few-shot learning come in.

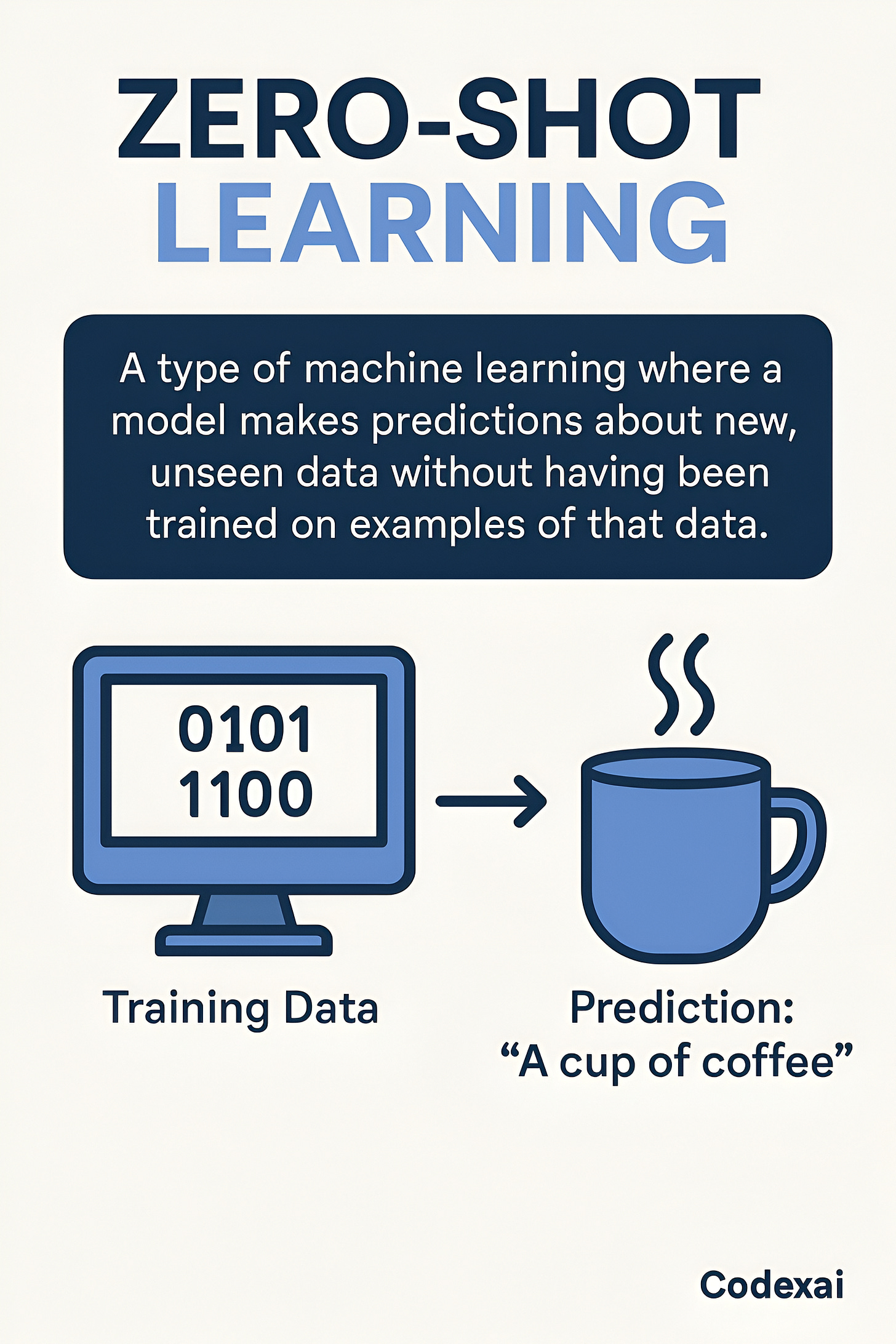

Zero-shot Learning

Let’s say you walk into a room, hand someone a guitar, and say:

“Play something emotional.”

If they’re a pro, they’ll give you something. But will it match your vibe? Your taste? Your tempo? Probably not.

That’s Zero-shot learning.

You give no examples, just a bare instruction, and expect the system to “get it.” In prompt terms, that looks like:

“Write a Twitter thread about startup failure.”

Sounds clear.

But is it:

A personal story?

A list of mistakes?

A breakdown of a viral case study?

AI will guess. And you’ll probably shake your head. Because Zero-shot is a guessing game with a smart robot. And while it often gets close, close is rarely what you want when you’re trying to build something that actually works.

Few-shot Learning

Now switch it up. You give the same person a guitar and say:

“Here’s the kind of song I like.

This one’s soft. That one’s minimal. This third one builds up slowly.”

Then you say:

“Now play me something like that.”

You didn’t say “do this exact thing.” But you gave just enough context for them to understand your taste, format, rhythm, tone. That’s Few-shot learning.

You show the AI a few examples, and then ask it to follow the pattern.

Example prompt:

“Here’s a thread I wrote on product-market fit:

[insert text]

Here’s another I liked from Twitter:

[insert text]

Now write a new one about founder burnout using a similar tone.”

That’s not just prompting. That’s guiding. And in most cases, it works better.
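If you find yourself assembling prompts like this often, a tiny helper can keep the structure consistent. Here's a minimal sketch, assuming your examples are plain strings; the function name `build_few_shot_prompt` and the `tone_note` parameter are made up for illustration, and the `[insert text]` placeholders stand in for your real threads.

```python
def build_few_shot_prompt(examples, task, tone_note="a similar tone"):
    """Assemble a few-shot prompt: numbered examples first, then the request."""
    if not examples:
        raise ValueError("few-shot prompting needs at least one example")
    parts = []
    for i, text in enumerate(examples, start=1):
        # Label each example so the model can tell where one ends and the next begins.
        parts.append(f"Example {i}:\n{text.strip()}")
    # The actual request goes last, after the pattern has been shown.
    parts.append(f"Now write a new one about {task} using {tone_note}.")
    return "\n\n".join(parts)


prompt = build_few_shot_prompt(
    examples=["[insert text]", "[insert text]"],  # placeholders, paste real threads here
    task="founder burnout",
)
print(prompt)
```

The order matters more than the helper itself: examples first, request last, so the model sees the pattern before it's asked to continue it.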

Why People Keep Failing With AI (Even Smart Developers)

Because they use Zero-shot by default.

It’s quick. It feels “cleaner.”

They don’t want to clutter up the prompt box.

But here’s the irony:

You either spend 30 seconds giving a good example...

...or you waste 5 minutes editing AI’s bad output.

Your choice.

Let’s Break It Down Technically (But Keep It Simple)

A model like GPT doesn’t “learn” in real time.

When you give it a prompt, it isn’t updating its weights.

It’s just processing what you gave it and trying to continue the pattern.

So when you:

give no examples, it falls back on its training data. That’s Zero-shot.

give 2–5 relevant examples, it mimics them. That’s Few-shot.

The “learning” isn’t real learning.

It’s more like pattern imitation inside a single conversation.
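To make that concrete, here's a rough sketch of what "examples inside a single conversation" can look like. It assumes the `role`/`content` message shape that many chat-style APIs use (check your provider's docs for the exact format), and `shot_label` and `build_messages` are hypothetical helper names, not part of any library. Nothing here calls a model. It only builds the list you'd send.

```python
def shot_label(num_examples):
    """Name the prompting style by how many examples the prompt carries."""
    if num_examples == 0:
        return "zero-shot"
    if num_examples == 1:
        return "one-shot"
    return "few-shot"


def build_messages(instruction, new_input, examples=()):
    """Return a chat-style message list; examples are (input, output) pairs.

    The examples are just earlier turns in the same conversation.
    The model itself is not changed by them.
    """
    messages = [{"role": "system", "content": instruction}]
    for sample_input, sample_output in examples:
        messages.append({"role": "user", "content": sample_input})
        messages.append({"role": "assistant", "content": sample_output})
    # The real request always comes last.
    messages.append({"role": "user", "content": new_input})
    return messages


instruction = "Summarize the document in three bullet points."
zero = build_messages(instruction, "<new doc>")
few = build_messages(
    instruction,
    "<new doc>",
    examples=[
        ("<doc A>", "- point one\n- point two\n- point three"),
        ("<doc B>", "- point one\n- point two\n- point three"),
    ],
)
print(shot_label(0), len(zero))  # zero-shot 2
print(shot_label(2), len(few))   # few-shot 6
```

Start a fresh conversation without those example turns and the "learning" is gone, which is exactly why it's imitation rather than training.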

That’s why good prompt writers get shockingly good results — even when the task is weird or complex.

They don’t write longer prompts.

They just write better ones.

⚠️ But Wait... What’s One-Shot Learning Then?

Just one example.

You show the AI a single format and then ask it to follow it.

Kind of like giving someone a template and saying,

“Do that again. Different topic. Same style.”

Works decently.

But with only one reference, AI might latch onto the wrong parts.

It’s like showing a child one way to tie shoes and hoping they figure it out for every shoe in the world.

Your New Mental Model

Every time you give AI a task, ask yourself:

“Am I assuming it knows what I mean by this request?”

If the answer is yes — back up.

Give it an example or two.

This shift alone can noticeably improve the quality of your outputs.

And let’s be honest: if you’re using tools like Gemini, Claude, or GPT in your dev workflow, you don’t have time to babysit AI’s guesses.

Off-topic but You’ll Love This

You ever notice how people who prompt like pros don’t brag about it?

They just get stuff done faster.

They automate workflows.

They build apps in half the time.

It’s not magic.

It’s not a secret skill.

It’s knowing how to talk to the machine so it talks back the way you want.

Most people are out here treating GPT like Google. But you?

You’re learning to treat it like a teammate, one that listens, adapts, and executes.

Here’s What You Should Do Next

Create a tiny “Prompt Swipe File.” Just a Google Doc or Notion page.

Every time you write a good prompt that worked:

Save it.

Label the task.

Note whether it was Zero-shot, One-shot, or Few-shot.

Over time, you won’t just use AI better — you’ll build your own personal AI OS.
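A Google Doc or Notion page is plenty. But if you'd rather keep your swipe file next to your code, here's one possible sketch that stores each prompt as a line of JSON. The file layout, the `PromptEntry` fields, and the helper names are all my own assumptions for illustration, so shape them however suits you.

```python
import json
from dataclasses import dataclass, asdict
from pathlib import Path


@dataclass
class PromptEntry:
    task: str    # label for what the prompt was used for
    style: str   # "zero-shot", "one-shot", or "few-shot"
    prompt: str  # the prompt text that worked


def save_entry(path, entry):
    """Append one entry to the swipe file as a single JSON line."""
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(entry)) + "\n")


def load_entries(path, style=None):
    """Read entries back, optionally keeping only one prompting style."""
    entries = []
    with Path(path).open(encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            entry = PromptEntry(**json.loads(line))
            if style is None or entry.style == style:
                entries.append(entry)
    return entries
```

For example, `save_entry("swipe.jsonl", PromptEntry("twitter thread", "few-shot", "..."))` adds a prompt, and `load_entries("swipe.jsonl", style="few-shot")` pulls back only the few-shot ones when you need a starting point.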

If this made prompting clearer for you, do yourself a favor:

Bookmark this article

Or better, reply and tell me which style you’ve been using until now. I reply to every Codexai reader.

And if someone on your team or in your friend circle is still using ChatGPT like Google…

Forward this to them. Their results will thank you later.

PS: Got a favorite AI prompt or an AI tool you swear by?

🚧 Codexai is where code, AI, and software creation collide—with practical tutorials, real dev stories, and indie SaaS insights written like an older brother guiding you through the chaos.

No AI hype. No fluff.

Just real-world guidance for smart builders like you.

Join developers turning AI tools into their personal assistants.

📩 Subscribe now to get every issue straight to your inbox, 100% free!

Manas xx! 🥂